The Scale of the Problem

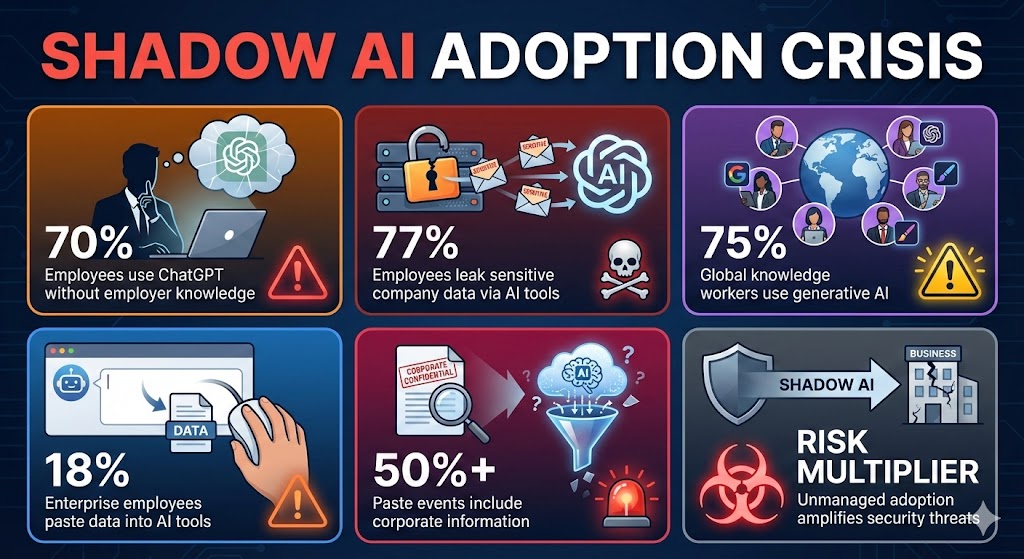

In conference rooms and cubicles across corporate America, a quiet security crisis is unfolding. Approximately 70% of employees who use ChatGPT and other AI tools do so without informing their managers (1), creating what security experts call “shadow AI”: the unauthorized use of personal AI accounts for work tasks.

The statistics are alarming. A 2025 report from LayerX Security found that 77% of employees share sensitive company data through ChatGPT and AI tools. The same report found that approximately 18% of enterprise employees paste data into generative AI tools, and that more than half of those paste events include corporate information (2). By 2024, 75% of global knowledge workers reported using generative AI (3), with adoption continuing to accelerate.

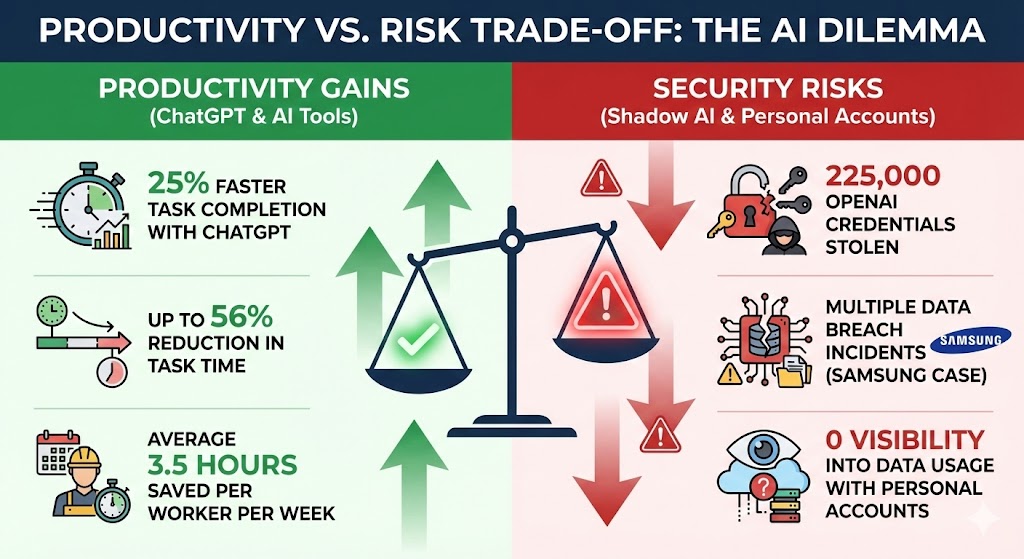

The rapid, uncontrolled spread isn’t surprising. Research from Harvard, MIT, and BCG finds that consultants using ChatGPT complete tasks up to 25% faster than those who do not use AI, with some studies showing task time dropping by as much as 56% (4). When productivity gains are this significant, employees naturally gravitate toward these tools — with or without corporate approval.

The Email Example: A Perfect Storm of Convenience and Risk

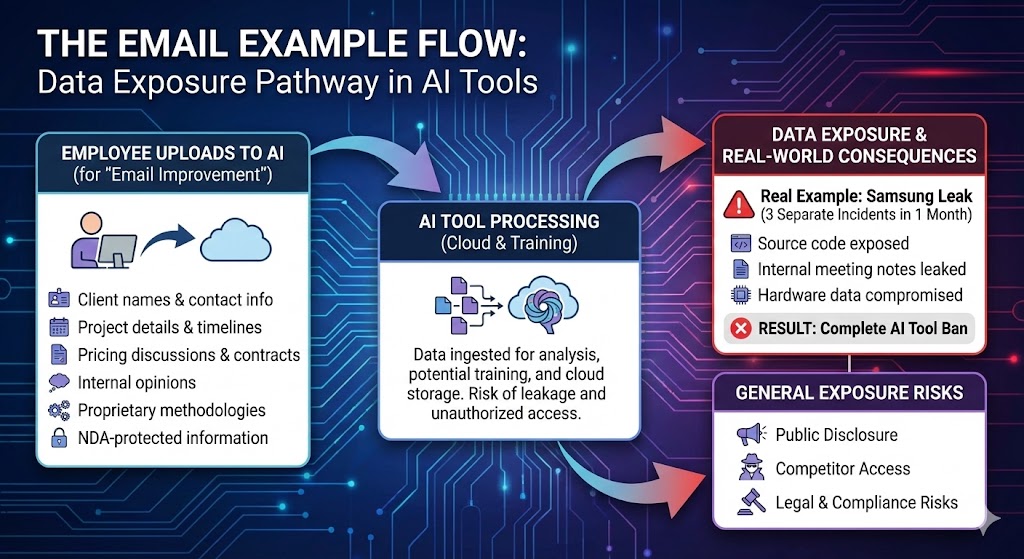

The most common use case illustrates the problem perfectly. Workers most frequently use ChatGPT to aid with writing and refining emails, letters, and reports (5). On the surface, this seems harmless. An employee drafts a response to a client, pastes it into ChatGPT with the prompt “make this more professional,” receives polished text, and sends it off. The entire process takes 30 seconds.

But consider what just happened:

The employee may have just uploaded:

- Client names and contact information

- Project details and timelines

- Pricing discussions and contract terms

- Internal opinions about clients or competitors

- Proprietary methodologies or processes

- Information subject to NDAs or confidentiality agreements

All of this data now exists on OpenAI’s servers, processed through their systems, and potentially used in ways the company never authorized.

A real-world example demonstrates the stakes. Samsung faced a significant data leak when employees inadvertently exposed sensitive company information while using ChatGPT. Employees leaked sensitive data on three separate occasions within a month, including source code, internal meeting notes, and hardware-related data (6). Samsung’s response was swift: the company banned generative AI tools and began developing an in-house solution.

The Security Vulnerabilities of Personal AI Accounts

When employees use personal AI accounts for work, they create multiple security vulnerabilities:

1. Data Retention and Training

Most consumer AI services retain conversation history and may use inputs to improve their models. While OpenAI has policies around data usage, researchers demonstrated that by prompting ChatGPT to repeat specific words indefinitely, they could extract verbatim memorized training examples, including personally identifiable information, NSFW content, and proprietary literature (7).

2. Credential Compromise

Over 225,000 sets of OpenAI credentials were discovered for sale on the dark web, stolen by various infostealer malware, with LummaC2 being the most prevalent (8). If an employee’s personal account is compromised, attackers gain access to every conversation — including those containing company data.

3. Lack of Access Controls

Personal accounts have no integration with corporate identity management, no audit trails tied to company systems, and no ability to revoke access when employees leave. Organizations have zero visibility into what data is being shared or how it’s being used.

4. Supply Chain Vulnerabilities

In November 2025, a third-party analytics provider used by OpenAI, Mixpanel, suffered a security incident where attackers gained unauthorized access and exported datasets containing limited customer identifiable information and analytics information (9). Even when the core AI service isn’t directly breached, third-party vendors create additional risk vectors.

5. Regulatory Compliance Failures

The CEO of LayerX Security noted that having enterprise data leak via AI tools can raise geopolitical issues, regulatory and compliance concerns, and lead to corporate data being inappropriately used for training if exposed through personal AI tool usage (10). For organizations subject to HIPAA, GDPR, PCI DSS, or other regulations, employee use of personal AI accounts may constitute a direct compliance violation.

The Alternative: Private and Self-Hosted LLMs

Organizations serious about AI adoption while maintaining security have several alternatives to consumer AI services:

Private Cloud-Managed Solutions

Companies like OpenAI, Anthropic, and others offer enterprise versions with enhanced security:

- Data isolation: Conversations aren’t used for training

- Admin controls: IT can manage access and see usage patterns

- Compliance certifications: HIPAA, SOC 2, and other frameworks

- Audit trails: Full logging of who accessed what and when

Self-Hosted Open-Source Models

Organizations can deploy models like Llama, Mistral, or others on their own infrastructure:

- Complete control: Data never leaves company systems

- Customization: Fine-tune models for specific needs

- No per-token costs: After initial setup, usage is “free”

- Compliance: Easier to meet regulatory requirements

Hybrid Approaches

Many organizations adopt a tiered strategy:

- Public APIs for non-sensitive tasks

- Private cloud instances for moderate-sensitivity work

- Fully self-hosted models for highly confidential operations
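The tiered strategy above can be sketched as a simple lookup from a data-sensitivity label to a deployment tier. This is an illustration only: the label strings, the `Tier` enum, and the `choose_tier` function are hypothetical names, and a real policy would define its own classification scheme.

```python
from enum import Enum


class Tier(Enum):
    PUBLIC_API = "public API"
    PRIVATE_CLOUD = "private cloud instance"
    SELF_HOSTED = "self-hosted model"


# Hypothetical sensitivity labels; a real policy would define its own.
TIER_BY_SENSITIVITY = {
    "non-sensitive": Tier.PUBLIC_API,
    "moderate": Tier.PRIVATE_CLOUD,
    "highly-confidential": Tier.SELF_HOSTED,
}


def choose_tier(sensitivity: str) -> Tier:
    """Map a sensitivity label to a tier, failing closed on unknown labels."""
    key = sensitivity.strip().lower()
    # Anything unlabeled or unrecognized goes to the most restrictive tier.
    return TIER_BY_SENSITIVITY.get(key, Tier.SELF_HOSTED)


for label in ("non-sensitive", "moderate", "highly-confidential", "unlabeled"):
    print(f"{label:>20} -> {choose_tier(label).value}")
```

Sending unrecognized labels to the self-hosted tier is a design assumption in this sketch: it trades some cost for the guarantee that unclassified data never reaches a public service.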

The Costs of Private LLMs

The question that stops most organizations: “What will this cost?”

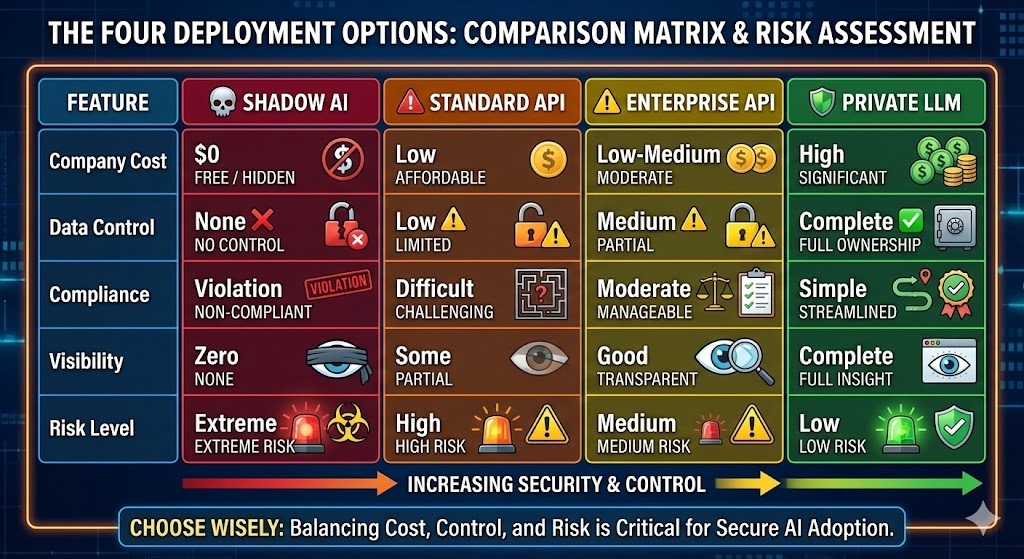

Understanding AI deployment costs requires comparing four distinct approaches: allowing employees to continue using personal accounts (shadow AI), implementing company-managed cloud APIs, adopting enterprise-grade cloud services, or deploying private infrastructure. Each option involves different cost structures and risk profiles.

The Four Deployment Options

Before examining specific costs, it’s important to understand what organizations are actually comparing:

Shadow AI (Current State)

Employees using personal ChatGPT, Claude, or other AI accounts to accomplish work tasks. The company pays nothing directly, but assumes significant security and compliance risks.

Standard Cloud API

The company officially subscribes to commercial AI services (e.g., OpenAI API, Anthropic API, Google Gemini) with standard terms. Data is sent to vendor servers over the internet, typically on a pay-per-use basis.

Enterprise Cloud API

Business-tier cloud services with enhanced security features, compliance certifications (SOC 2, HIPAA-eligible), data usage restrictions (not used for model training), and administrative controls. Similar per-token pricing to standard APIs but with contractual protections.

Private Self-Hosted LLM

Open-source models (Llama, Mistral, or similar) deployed on company-owned infrastructure, either in private data centers or dedicated cloud environments. Data never leaves company control.

Calculating AI Usage Costs

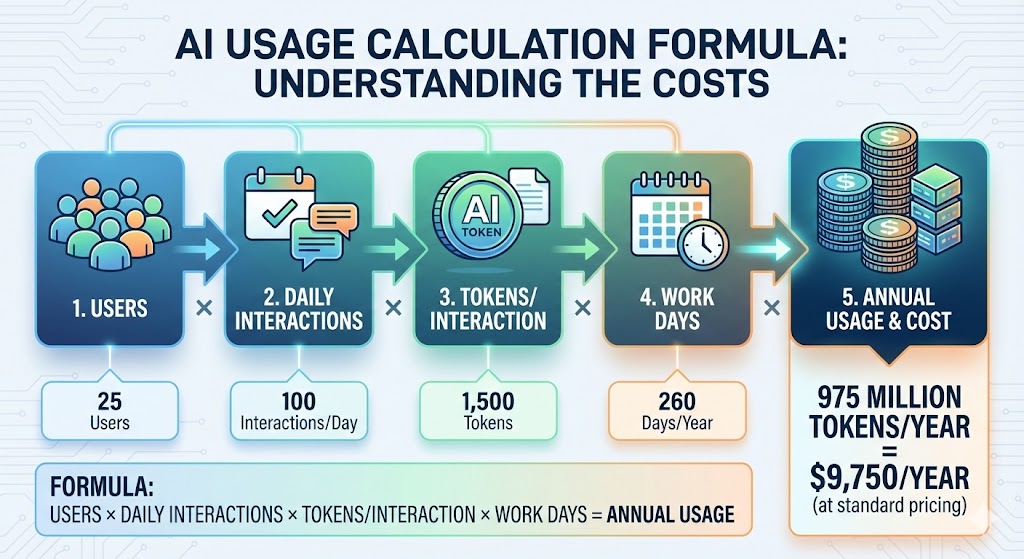

To understand when each option makes financial sense, we need to calculate realistic usage patterns. Most organizations follow this general formula:

Users × Daily Interactions × Tokens per Interaction × Work Days = Annual Token Usage

A typical knowledge worker might have:

- 100 AI interactions per day (emails, document drafting, research, brainstorming)

- 1,500 tokens per interaction (combined input prompt and AI response)

- 260 work days per year (standard 5-day work week, 52 weeks)
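As a minimal sketch, the formula and assumptions above can be written as a small Python function. The function name and parameter names are illustrative; the default values simply mirror the figures listed above.

```python
def annual_token_usage(users: int,
                       interactions_per_day: int = 100,
                       tokens_per_interaction: int = 1_500,
                       work_days: int = 260) -> int:
    """Users x daily interactions x tokens per interaction x work days."""
    return users * interactions_per_day * tokens_per_interaction * work_days


for team_size in (5, 25, 100):
    print(f"{team_size:>3} users: {annual_token_usage(team_size):,} tokens/year")
```

Changing any default (for example, fewer interactions per day for lighter users) scales the result linearly, which makes it easy to test how sensitive the later cost figures are to these assumptions.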

Let’s examine costs at three different organizational scales:

Small Team: 5 Users

Annual Token Usage:

- 5 users × 100 interactions/day × 1,500 tokens/interaction = 750,000 tokens/day

- 750,000 tokens/day × 260 work days = 195 million tokens/year

Cost Comparison:

Shadow AI:

- Annual Cost: $0 (to company)

- Data Control: None ❌

- Analysis: Hidden risk cost of $100K-400K annually

Standard Cloud API:

- Annual Cost: $1,950

- Data Control: Low ⚠️

- Analysis: Cost-effective for this scale

Enterprise Cloud API:

- Annual Cost: $2,000-3,000

- Data Control: Medium ⚠️

- Analysis: Best option for small teams

Private LLM:

- Annual Cost: $70,000-130,000 (first year)

- Data Control: Complete ✅

- Analysis: Not cost-justified at this scale

Note: Standard pricing assumes $0.01 per 1,000 tokens (blended rate). Actual costs vary by model and provider: GPT-4o ranges from $2.50-10 per million tokens depending on input vs. output.
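The standard-API figures in this article follow from multiplying annual tokens by the blended rate in the note above. A minimal sketch, assuming a single flat rate for input and output tokens (the function name is illustrative):

```python
def annual_api_cost(annual_tokens: int, usd_per_1k_tokens: float = 0.01) -> float:
    """Annual spend in USD at a flat blended per-1,000-token rate."""
    return round(annual_tokens / 1_000 * usd_per_1k_tokens, 2)


# Annual token totals for the three team sizes discussed in this article.
for users, tokens in ((5, 195_000_000), (25, 975_000_000), (100, 3_900_000_000)):
    print(f"{users:>3} users: ${annual_api_cost(tokens):,.0f}")
```

Note that $0.01 per 1,000 tokens equals $10 per million tokens, the top of the GPT-4o range quoted above, so a workload dominated by cheaper input tokens would likely come in below these totals.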

Recommendation for 5 users: Enterprise Cloud API provides the best balance of cost and security. Private LLMs are not economically justified at this scale unless dealing with extremely sensitive data requiring air-gapped systems.

Medium Team: 25 Users

Annual Token Usage:

- 25 users × 100 interactions/day × 1,500 tokens/interaction = 3.75 million tokens/day

- 3.75 million tokens/day × 260 work days = 975 million tokens/year

Cost Comparison:

Shadow AI:

- Annual Cost: $0 (to company)

- Data Control: None ❌

- Analysis: Hidden risk cost of $500K-2M annually

Standard Cloud API:

- Annual Cost: $9,750

- Data Control: Low ⚠️

- Analysis: Economical but risky

Enterprise Cloud API:

- Annual Cost: $10,000-15,000

- Data Control: Medium ⚠️

- Analysis: Good balance

Private LLM:

- Annual Cost: $70,000-130,000 (first year), $40,000-80,000 (year 2+)

- Data Control: Complete ✅

- Analysis: 6-8 year payback on cost alone

Recommendation for 25 users: This is the decision point. Enterprise Cloud APIs remain more cost-effective, but organizations in regulated industries (healthcare, finance, legal) should seriously consider private LLMs. The cost premium of $30,000-70,000 annually buys complete data control and simplified compliance.

Larger Team: 100 Users

Annual Token Usage:

- 100 users × 100 interactions/day × 1,500 tokens/interaction = 15 million tokens/day

- 15 million tokens/day × 260 work days = 3.9 billion tokens/year

Cost Comparison:

Shadow AI:

- Annual Cost: $0 (to company)

- Data Control: None ❌

- Analysis: Hidden risk cost of $2M-8M annually

Standard Cloud API:

- Annual Cost: $39,000

- Data Control: Low ⚠️

- Analysis: Significant exposure

Enterprise Cloud API:

- Annual Cost: $40,000-60,000

- Data Control: Medium ⚠️

- Analysis: Still viable

Private LLM:

- Annual Cost: $150,000-400,000 (first year), $100,000-250,000 (year 2+)

- Data Control: Complete ✅

- Analysis: 3-5 year payback

Recommendation for 100 users: Private LLMs become economically competitive. The cost differential narrows significantly, and the security benefits become compelling even for organizations without strict regulatory requirements.

Self-Hosted Deployment Cost Breakdown

For organizations seriously considering private infrastructure, here’s what the investment actually covers:

Small-Scale Deployment (7B-13B parameter models)

Suitable for: 25-50 users with moderate AI usage

- Hardware: Single high-end GPU (A100 80GB)

- Cloud: $3-5/hour = $2,160-3,600/month

- On-premise purchase: $10,000-15,000

- Storage: 500GB-1TB for model weights and conversation logs

- Networking: Standard enterprise bandwidth

- Staff: 0.25-0.5 FTE for maintenance and optimization

- Total First Year: $30,000-50,000 (setup) + $40,000-80,000 (operations)

- Total Year 2+: $40,000-80,000 annually
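The cloud hardware figure above appears to assume a single GPU running around the clock for a 30-day month; under that assumption the quoted monthly range can be reproduced as follows. The constant and function names are illustrative.

```python
HOURS_PER_DAY = 24
DAYS_PER_MONTH = 30  # assumption: a 30-day month of continuous use


def monthly_gpu_cost(hourly_rate_usd: float) -> float:
    """Cost of one cloud GPU left running for a full month."""
    return hourly_rate_usd * HOURS_PER_DAY * DAYS_PER_MONTH


low, high = monthly_gpu_cost(3), monthly_gpu_cost(5)
print(f"Monthly: ${low:,.0f}-{high:,.0f}")
print(f"Annual:  ${low * 12:,.0f}-{high * 12:,.0f}")
```

If the instance only ran during working hours the figure would be lower; the sketch does not model that.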

Medium-Scale Deployment (30B-70B parameter models)

Suitable for: 100-300 users or specialized high-performance needs

- Hardware: 8-16 GPUs (A100 or H100)

- Cloud cluster: tens of thousands of dollars per month

- On-premise: $80,000-240,000

- Storage: 2-5TB with redundancy

- Networking: High-speed interconnects (10-25 GbE)

- Staff: 1-2 FTE for MLOps, maintenance, security

- Total First Year: $100,000-300,000 (setup) + $150,000-400,000 (operations)

- Total Year 2+: $150,000-400,000 annually

Enterprise-Scale Deployment

Suitable for: 500+ users, mission-critical applications, multi-model deployment

- Hardware: Multi-node GPU clusters with redundancy

- Storage: Petabyte-scale with backup and disaster recovery

- Networking: 100GbE dedicated infrastructure

- Staff: Dedicated team of 3-5 (MLOps engineers, infrastructure specialists)

- Total First Year: $500,000-2,000,000

- Total Year 2+: $500,000-1,500,000 annually

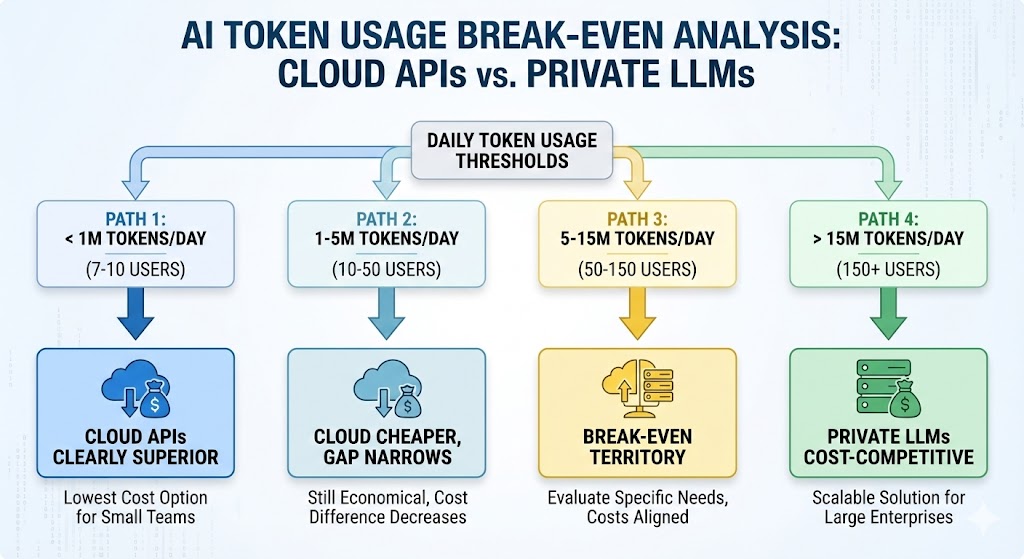

When Does Private LLM Make Economic Sense?

One build-versus-buy analysis estimates that a private LLM starts to pay off when you process over 2 million tokens per day or require strict compliance like HIPAA or PCI, with most teams seeing payback within 6 to 12 months when compliance value is factored in (11).

Pure Cost-Based Decision:

- Under 1 million tokens/day (roughly 7 users at the 150,000 tokens per user per day assumed earlier): Cloud APIs are clearly superior

- 1-5 million tokens/day (roughly 7-33 users): Cloud APIs still cheaper, but gap narrows

- 5-15 million tokens/day (roughly 33-100 users): Break-even territory; decision depends on growth trajectory

- Over 15 million tokens/day (100+ users): Private LLMs become cost-competitive on economics alone
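The pure cost-based thresholds above can be expressed as a small classifier over daily token volume. This is a sketch: the function name is illustrative, and how the exact boundary values (1, 5, and 15 million) are assigned to a band is an assumption, since the list does not specify it.

```python
def cost_based_recommendation(tokens_per_day: int) -> str:
    """Return the cost-only guidance band for a given daily token volume."""
    if tokens_per_day < 1_000_000:
        return "Cloud APIs are clearly superior"
    if tokens_per_day < 5_000_000:
        return "Cloud APIs still cheaper, but gap narrows"
    if tokens_per_day <= 15_000_000:
        return "Break-even territory"
    return "Private LLMs become cost-competitive"


# Daily volumes for 5, 25, and 100 users at 150,000 tokens per user per day.
for volume in (750_000, 3_750_000, 15_000_000):
    print(f"{volume:>12,} tokens/day: {cost_based_recommendation(volume)}")
```

This covers only the cost dimension; the compliance and risk considerations discussed next can override it.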

Compliance-Based Decision:

If your organization handles:

- Protected Health Information (PHI) under HIPAA

- Payment card data under PCI DSS

- Personally Identifiable Information under GDPR

- Financial records under SOX or FINRA

- Classified or CUI (Controlled Unclassified Information)

Then private LLMs may be justified at much smaller scales because cloud APIs—even enterprise-grade ones—create compliance complexity. The cost of a single compliance violation often exceeds the entire annual cost of private infrastructure.

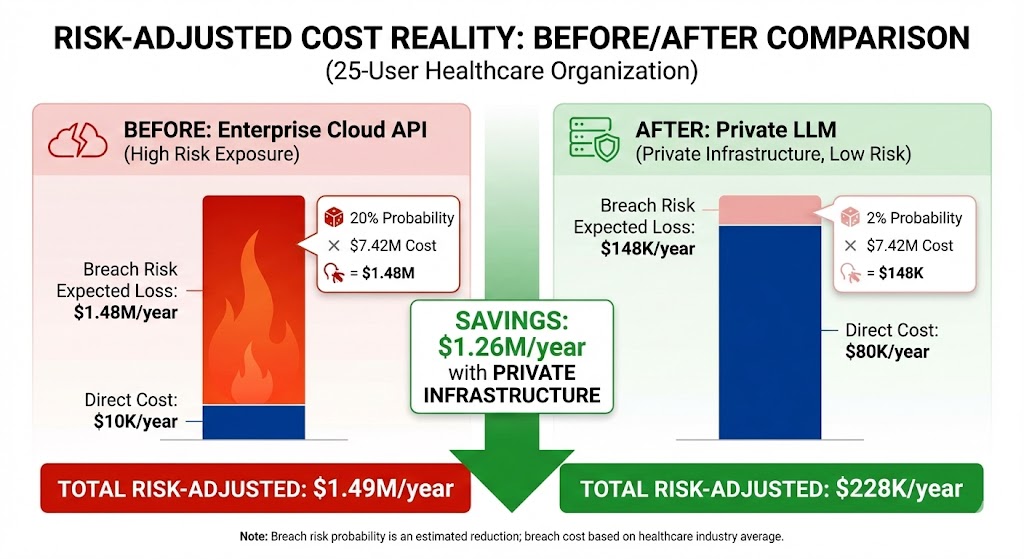

Risk-Adjusted Decision:

Even when cloud APIs appear cheaper on paper, the risk-adjusted calculation changes dramatically:

Example: 25-user organization in healthcare

Option A: Enterprise Cloud API

- Direct cost: $10,000/year

- Residual breach risk: 15-30% (despite enterprise features)

- Expected breach cost: $7.42M × 20% probability = $1.48M risk-adjusted cost

- Total risk-adjusted cost: $1.49M/year

Option B: Private LLM

- Direct cost: $80,000/year (ongoing)

- Residual breach risk: <2% (data never leaves infrastructure)

- Expected breach cost: $7.42M × 2% probability = $148K risk-adjusted cost

- Total risk-adjusted cost: $228K/year

From this perspective, private infrastructure isn’t more expensive—it’s dramatically cheaper once risk is properly valued.
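The risk-adjusted totals in this example are the direct cost plus the expected breach cost (breach cost multiplied by breach probability). A minimal sketch of that arithmetic follows; the probabilities are the illustrative figures used in this example, not measured rates, and the function name is hypothetical.

```python
HEALTHCARE_BREACH_COST_USD = 7_420_000  # average healthcare breach cost cited in this article


def risk_adjusted_cost(direct_cost: float, breach_probability: float,
                       breach_cost: float = HEALTHCARE_BREACH_COST_USD) -> float:
    """Direct annual cost plus the expected annual breach cost."""
    return direct_cost + breach_cost * breach_probability


enterprise_cloud = risk_adjusted_cost(direct_cost=10_000, breach_probability=0.20)
private_llm = risk_adjusted_cost(direct_cost=80_000, breach_probability=0.02)

print(f"Enterprise Cloud API: ${enterprise_cloud:,.0f}/year")
print(f"Private LLM:          ${private_llm:,.0f}/year")
```

The outcome is highly sensitive to the probabilities chosen, so it is worth rerunning the calculation with an organization's own risk estimates before relying on the comparison.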

Hidden Costs to Consider

Both cloud and private deployments have costs beyond the obvious:

Cloud API Hidden Costs:

- Data egress fees (extracting data from vendor)

- Vendor lock-in (retraining workflows if switching)

- Compliance audit costs (proving data handling)

- Legal review of vendor terms

- Incident response if vendor is breached

Private LLM Hidden Costs:

- Model updates and fine-tuning

- Security hardening and penetration testing

- Disaster recovery and backup infrastructure

- Staff training and knowledge development

- Opportunity cost of infrastructure team focus

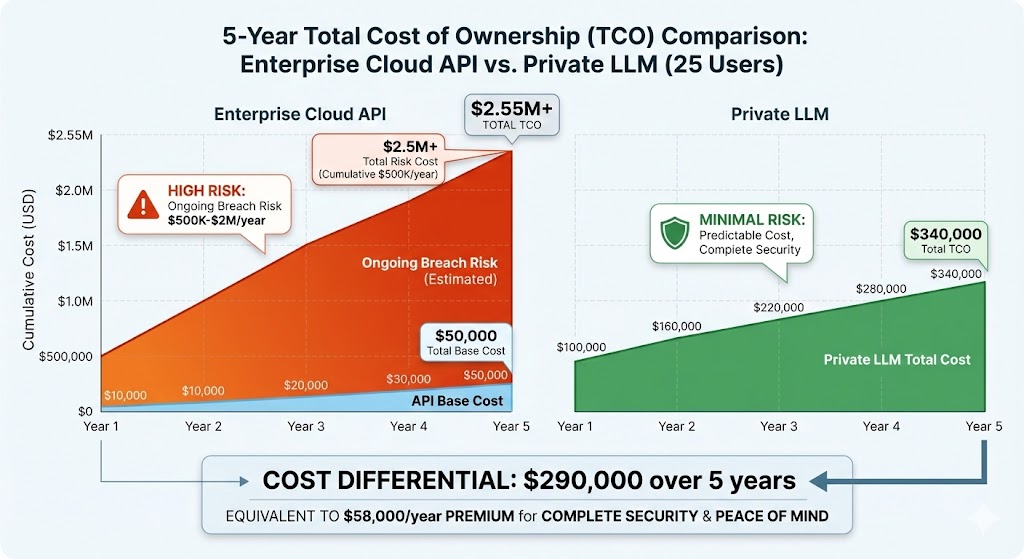

The Real Economic Comparison

Looking at a realistic 25-user organization over 5 years:

Enterprise Cloud API (5 years):

- Year 1-5: $10,000/year = $50,000 total

- Plus: Risk of $500K-2M breach annually

- Plus: Ongoing compliance complexity

- 5-year total: $50,000 + significant ongoing risk

Private LLM (5 years):

- Year 1: $100,000 (setup + operations)

- Year 2-5: $60,000/year = $240,000

- 5-year total: $340,000 with minimal residual risk

Cost differential: $290,000 over 5 years

Are complete data control, simplified compliance, and dramatically reduced breach risk worth $58,000 per year? For many organizations, especially those in regulated industries, the answer is unequivocally yes.
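The five-year comparison above reduces to a first-year cost plus four years of ongoing cost. A minimal sketch using the figures from this section (the function name is illustrative):

```python
def multi_year_total(first_year: int, later_years: int, years: int = 5) -> int:
    """Total direct cost: one first year plus (years - 1) ongoing years."""
    return first_year + later_years * (years - 1)


cloud_total = multi_year_total(first_year=10_000, later_years=10_000)
private_total = multi_year_total(first_year=100_000, later_years=60_000)
differential = private_total - cloud_total

print(f"Enterprise Cloud API: ${cloud_total:,}")
print(f"Private LLM:          ${private_total:,}")
print(f"Differential:         ${differential:,} (${differential // 5:,}/year)")
```

This counts direct costs only; breach risk and compliance effort are deliberately left out of the totals, as in the comparison above.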

For organizations without regulatory requirements and with limited AI usage, enterprise cloud APIs remain the practical choice. But as AI usage grows and data sensitivity increases, the economics shift decisively toward private infrastructure.

The question isn’t whether organizations can afford to implement private AI infrastructure. It’s whether they can afford not to.

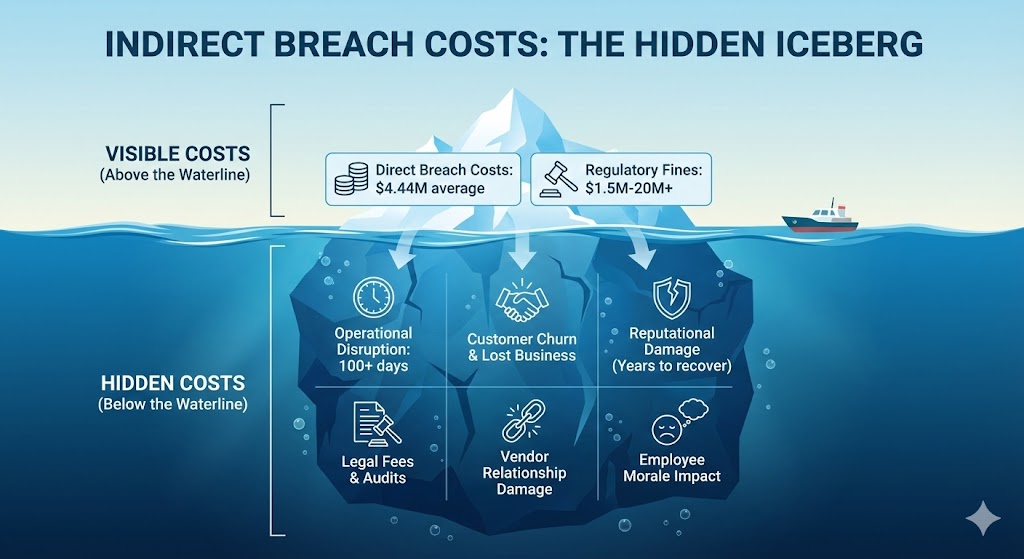

The Catastrophic Costs of NOT Implementing Private LLMs

While private LLM costs may seem substantial, they pale compared to the potential costs of a data breach caused by shadow AI usage.

Direct Breach Costs

The financial impact of data breaches has reached record levels:

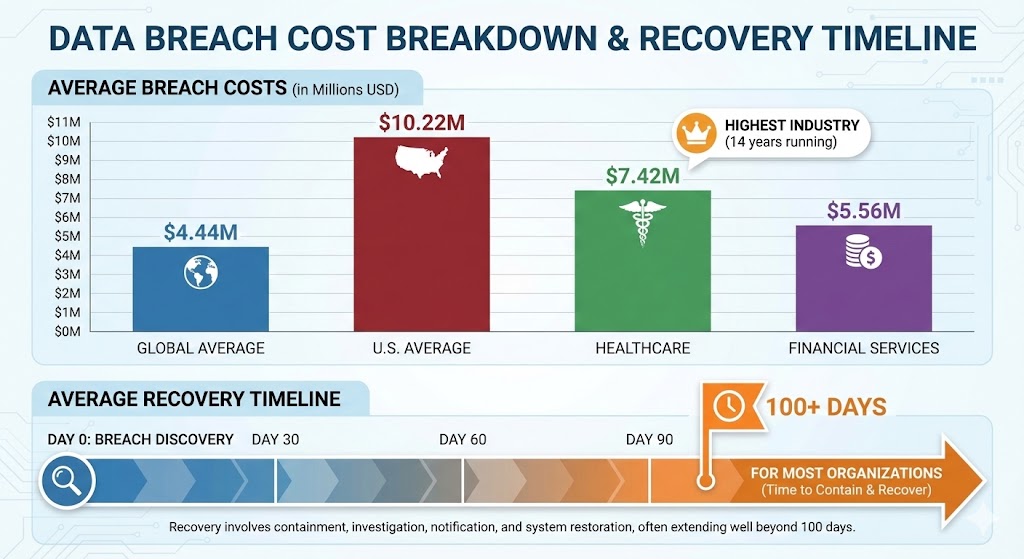

The global average cost of a data breach reached $4.44 million in 2025, while U.S. data breaches set a new record at $10.22 million, increasing by 9.2% from $9.36 million in 2024 (12).

For heavily regulated industries, the costs are even more severe:

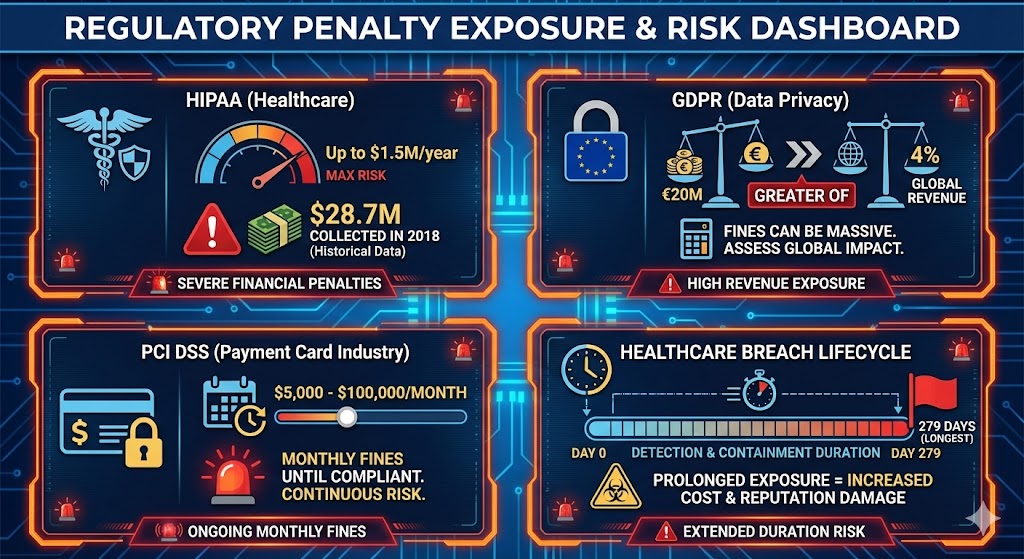

Healthcare: Healthcare breaches average $7.42 million per incident in 2025, making it the most costly industry for 14 consecutive years, with an average time to identify and contain of 279 days (13).

Financial Services: The financial sector faces the second-highest breach costs at $5.56 million, with attackers using sophisticated bank heist techniques targeting high-value assets (14).

Regulatory Penalties

Beyond direct breach costs, regulatory fines add substantial risk:

HIPAA Violations: Penalties can reach up to $1.5 million per year under HIPAA, with OCR collecting $28,683,400 in HIPAA fines and settlements in 2018, a record at the time (15).

GDPR Violations: The EU GDPR imposes a maximum fine of €20 million or 4% of annual global turnover, whichever is greater, for infringements (16).

PCI DSS: Breaches can result in fines of $5,000 to $100,000 per month under PCI DSS (17).
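Of these penalties, the GDPR ceiling is the one that scales with company size. As a worked illustration of the "whichever is greater" rule stated above (the function and constant names are illustrative, and turnover is taken in whole euros):

```python
GDPR_FIXED_CAP_EUR = 20_000_000
GDPR_TURNOVER_SHARE_PERCENT = 4


def gdpr_max_fine_eur(annual_global_turnover_eur: int) -> int:
    """Upper bound on a GDPR fine: the greater of EUR 20M or 4% of turnover."""
    turnover_based = annual_global_turnover_eur * GDPR_TURNOVER_SHARE_PERCENT // 100
    return max(GDPR_FIXED_CAP_EUR, turnover_based)


for turnover in (100_000_000, 500_000_000, 2_000_000_000):
    print(f"Turnover EUR {turnover:,}: maximum fine EUR {gdpr_max_fine_eur(turnover):,}")
```

The crossover sits at €500 million in turnover: below that the fixed €20 million figure applies, above it the 4% figure does. These are maximums, not typical fines.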

Indirect Costs

The hidden costs often exceed the direct financial impact:

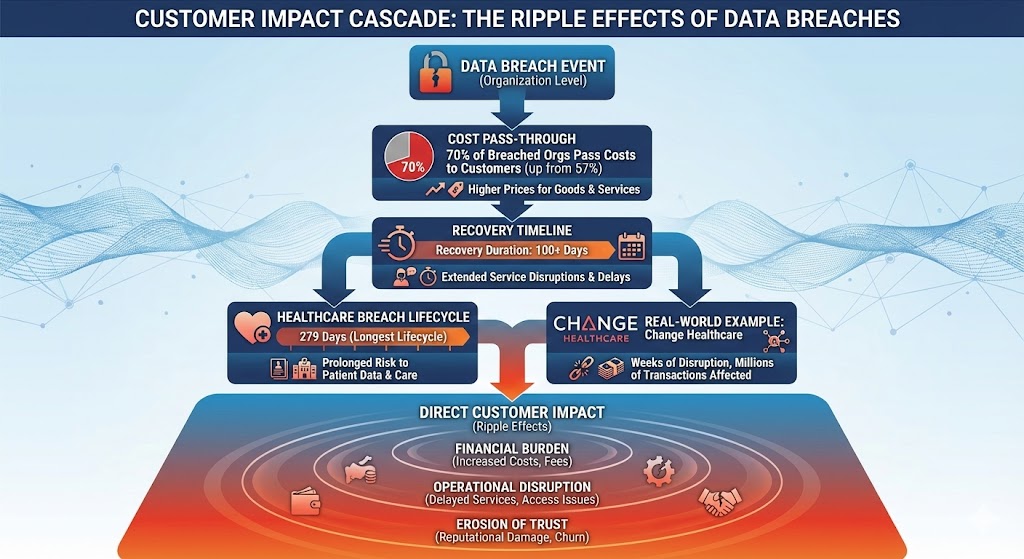

Operational Disruption: Almost all breached organizations report operational disruption, with the majority taking more than 100 days to recover from a data breach (18).

Customer Impact: In 2024, 70% of breached organizations passed increased costs on to customers, up from 57% in 2023 (19).

Lost Business and Revenue: The Change Healthcare incident in early 2024 delayed patient billing and claims processing for weeks, affecting millions of transactions (20).

Long-term Reputational Damage: Trust, once lost, can take years to rebuild. Customers may switch to competitors, partnerships may dissolve, and the company’s brand equity can suffer lasting harm.

Implementing a Comprehensive AI Strategy

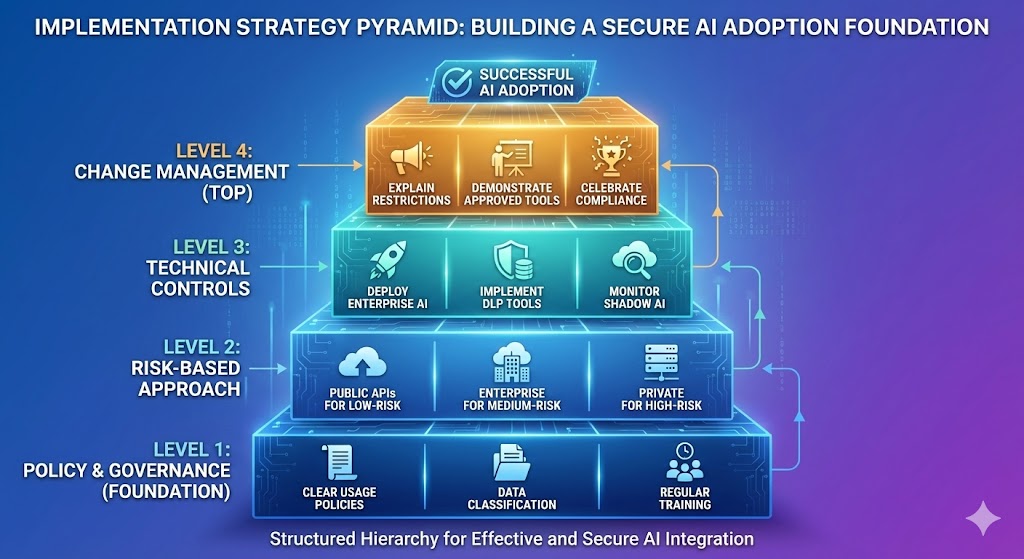

Organizations don’t need to choose between innovation and security. A comprehensive approach includes:

1. Policy and Governance

- Clear AI usage policies

- Classification of data sensitivity levels

- Approved tools for different use cases

- Regular training and communication

2. Technical Controls

- Deploy enterprise AI solutions with appropriate security

- Implement data loss prevention (DLP) tools

- Monitor for shadow AI usage

- Provide easy-to-use approved alternatives
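To make the DLP idea concrete, here is a toy sketch of a pre-send check that redacts a few obvious patterns before text reaches an AI tool. It is an assumption-laden illustration, not a substitute for a DLP product: the pattern set, the `redact` function, and the placeholder format are all hypothetical, and simple regular expressions will miss most of the sensitive content listed earlier (pricing, internal opinions, proprietary methods).

```python
import re

# Hypothetical, deliberately small pattern set for illustration.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CARD": re.compile(r"\b\d{4}[ -]?\d{4}[ -]?\d{4}[ -]?\d{4}\b"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace matches with placeholders and report which patterns fired."""
    findings = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label} REDACTED]", text)
        if count:
            findings.append(label)
    return text, findings


draft = "Contact jane.doe@example.com about card 4111 1111 1111 1111"
clean, found = redact(draft)
print(clean)
print(found)
```

A check like this could either block the request or log the findings for review; which is appropriate depends on the policy defined under governance.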

3. Risk-Based Approach

- Public AI APIs for non-sensitive tasks

- Enterprise cloud AI for moderate-risk work

- Private self-hosted models for confidential data

- Regular security audits

4. Change Management

- Explain why restrictions exist

- Demonstrate approved tools are equally convenient

- Celebrate employees who follow policy

- Make it easier to do the right thing than to work around controls

Conclusion

The convenience of ChatGPT and similar AI tools makes shadow AI usage nearly inevitable without proper alternatives. With 70% of employees already using personal AI accounts without employer knowledge (21), organizations face a clear choice: proactively implement secure AI infrastructure or reactively deal with the consequences of a breach.

The math is straightforward. A comprehensive private LLM solution costing $150,000-400,000 annually provides complete data security, regulatory compliance, predictable costs, and peace of mind.

Compare this to the alternative: rolling the dice on a $4-10 million breach, plus regulatory fines, operational disruption, and reputational damage.

The question isn’t whether organizations can afford to implement private AI infrastructure. It’s whether they can afford not to.

References

1. BusinessToday. (March 22, 2023). “Does your boss know? 70% of employees are using ChatGPT, other AI tools without employer’s knowledge.” https://www.businesstoday.in/technology/news/story/does-your-boss-know-70-of-employees-are-using-chatgpt-other-ai-tools-without-employers-knowledge-374364-2023-03-22

2. eSecurityPlanet. (October 9, 2025). “77% of Employees Leak Data via ChatGPT, Report Finds.” LayerX Security’s Enterprise AI and SaaS Data Security Report 2025. https://www.esecurityplanet.com/news/shadow-ai-chatgpt-dlp/

3. Microsoft Work Trend Index. (2024). “AI at Work Is Here. Now Comes the Hard Part.” Survey of 31,000 knowledge workers across 31 markets. https://www.microsoft.com/en-us/worklab/work-trend-index/ai-at-work-is-here-now-comes-the-hard-part

4. Second Talent. (December 2, 2025). “AI in the Workplace Statistics and Trends for 2026.” Citing research from Harvard, MIT, BCG, and Harvard Business Review. https://www.secondtalent.com/resources/ai-in-the-workplace-statistics-and-trends/

5. Business.com. (November 8, 2023). “ChatGPT Usage Rates Among American Workers: Study.” Survey of nearly 2,000 American workers. https://www.business.com/technology/chatgpt-usage-workplace-study/

6. Wald AI. (January 10, 2026). “ChatGPT Data Leaks and Security Incidents (2023-2025): A Comprehensive Overview.” https://wald.ai/blog/chatgpt-data-leaks-and-security-incidents-20232024-a-comprehensive-overview

7. Wald AI. (January 10, 2026). “ChatGPT Data Leaks and Security Incidents (2023-2025): A Comprehensive Overview.” Research on extracting memorized training data. https://wald.ai/blog/chatgpt-data-leaks-and-security-incidents-20232024-a-comprehensive-overview

8. Wald AI. (January 10, 2026). “ChatGPT Data Leaks and Security Incidents (2023-2025): A Comprehensive Overview.” Dark web credential discovery. https://wald.ai/blog/chatgpt-data-leaks-and-security-incidents-20232024-a-comprehensive-overview

9. OpenAI. (November 2025). “What to know about a recent Mixpanel security incident.” https://openai.com/index/mixpanel-incident/

10. eSecurityPlanet. (October 9, 2025). “77% of Employees Leak Data via ChatGPT, Report Finds.” Quote from Or Eshed, CEO of LayerX Security. https://www.esecurityplanet.com/news/shadow-ai-chatgpt-dlp/

11. Ptolemay. “LLM Total Cost of Ownership 2025: Build vs Buy Math.” https://www.ptolemay.com/post/llm-total-cost-of-ownership

12. HIPAA Journal. (July 30, 2025). “Average Cost of a Healthcare Data Breach Falls to $7.42 Million.” IBM 2025 Cost of a Data Breach Report. https://www.hipaajournal.com/average-cost-of-a-healthcare-data-breach-2025/

13. HIPAA Journal. (July 30, 2025). “Average Cost of a Healthcare Data Breach Falls to $7.42 Million.” IBM 2025 Cost of a Data Breach Report. https://www.hipaajournal.com/average-cost-of-a-healthcare-data-breach-2025/; Sprinto. (October 24, 2025). “Healthcare Data Breach Statistics: HIPAA Violation Cases and Preventive Measures in 2025.” https://sprinto.com/blog/healthcare-data-breach-statistics/

14. DeepStrike. (December 7, 2025). “Data Breach Statistics 2025: Costs, Trends, and Key Findings.” https://deepstrike.io/blog/data-breach-statistics-2025

15. Accutive Security. (June 13, 2025). “Data Breach Statistics 2024: Penalties for Major regulations.” https://accutivesecurity.com/data-breach-statistics-2024-penalties-and-fines-for-major-regulations/; Sprinto. (October 24, 2025). “Healthcare Data Breach Statistics.” https://sprinto.com/blog/healthcare-data-breach-statistics/

16. Mitigata. (July 17, 2025). “Cost of Data Breaches in 2025.” https://mitigata.com/blog/cost-of-a-data-breach/; Accutive Security. (June 13, 2025). “Data Breach Statistics 2024: Penalties for Major regulations.” https://accutivesecurity.com/data-breach-statistics-2024-penalties-and-fines-for-major-regulations/

17. Accutive Security. (June 13, 2025). “Data Breach Statistics 2024: Penalties for Major regulations.” https://accutivesecurity.com/data-breach-statistics-2024-penalties-and-fines-for-major-regulations/

18. HIPAA Journal. (July 30, 2025). “Average Cost of a Healthcare Data Breach Falls to $7.42 Million.” IBM 2025 Cost of a Data Breach Report. https://www.hipaajournal.com/average-cost-of-a-healthcare-data-breach-2025/

19. The HIPAA Guide. (November 8, 2024). “Data Breach Costs Increase by 10% to $4.9 Million.” https://www.hipaaguide.net/data-breach-costs-2024/

20. Sprinto. (October 24, 2025). “Healthcare Data Breach Statistics: HIPAA Violation Cases and Preventive Measures in 2025.” https://sprinto.com/blog/healthcare-data-breach-statistics/

21. BusinessToday. (March 22, 2023). “Does your boss know? 70% of employees are using ChatGPT, other AI tools without employer’s knowledge.” https://www.businesstoday.in/technology/news/story/does-your-boss-know-70-of-employees-are-using-chatgpt-other-ai-tools-without-employers-knowledge-374364-2023-03-22

About This Article

This analysis is based on extensive research into AI security practices, data breach costs, and private LLM deployment options. Organizations should consult with legal counsel, security professionals, and AI specialists to determine the right approach for their specific situation. The technology landscape evolves rapidly, and costs and capabilities continue to change as the market matures.